Hype of Generative AI

Generative AI is not just a fleeting trend; it is a transformative force that has captured global interest. Comparable in significance to the dawn of the internet, it is changing the way we search, communicate, and use data. Its applications range from enhancing business processes to serving as an academic guide or helping draft articulate emails. Developers have even begun to favor it over traditional resources for coding assistance. The term Retrieval Augmented Generation (RAG), introduced by Meta in 2020 [1], is now familiar in the corporate world. However, deploying such technologies at an enterprise level often runs into hurdles such as task specificity, accuracy, and the need for robust controls.

Why enterprises struggle with industrializing Generative AI

Despite the enthusiasm, enterprises are grappling with the practicalities of adopting Generative AI.

According to a survey by MLInsider:

- 62% of AI professionals continue to say it is difficult to execute successful AI projects. The larger the company, the more difficult it is to execute a successful AI project.

- Lack of expertise, limited budget, and difficulty finding AI talent are the top challenges organizations face when executing ML programs.

- Only 25% of organizations have deployed Generative AI models to production in the past year.

- Organizations that deployed Generative AI models in the past year report several benefits: more than half have seen improved customer experiences (58%) and improved efficiency (53%).

In summary, Generative AI offers massive opportunities to enterprises, but skills gaps and enterprise security and governance requirements keep them behind on the adoption curve.

Industrialization of Generative AI applications

Building enterprise-grade Generative AI applications is now easier, thanks to SaaS-based model APIs and packages such as LangChain and LlamaIndex. Yet scaling these initiatives across an enterprise remains challenging. Historically, companies have fared better with a centralized platform that promotes reusability and governance, a practice reflected in the formation of AI and ML platform teams.

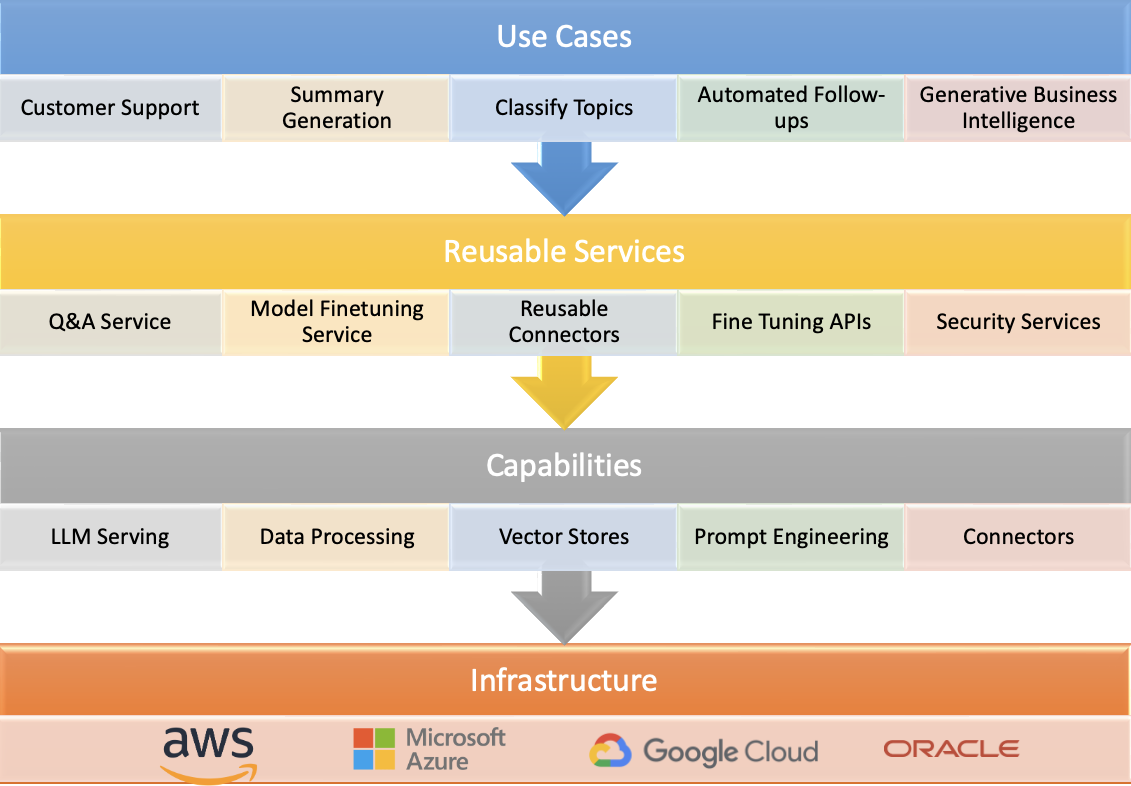

Enterprises should think about Gen AI platforms in terms of the four layers shown above:

- Infrastructure

  - Most companies have a primary cloud infrastructure and typically use the Gen AI building blocks offered by that cloud.

- Capabilities

  - These are foundational building-block services offered either as cloud-native services (e.g., OpenSearch, Azure OpenAI) or as third-party products (e.g., Milvus vector search).

- Reusable services

  - Central Gen AI teams typically have to build RAG (Retrieval Augmented Generation), fine-tuning, or model hub services that can be readily consumed with enterprise guardrails.

- Use cases

  - Using the reusable services, use cases can be deployed and integrated with a variety of applications, such as customer support bots, customer review summarization, and more.
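To make the "reusable services" layer more concrete, here is a minimal sketch of what a shared RAG service with a simple guardrail might look like. Everything in it is an assumption made for illustration: the class name `ToyRagService`, the `blocked_terms` guardrail, and the bag-of-words similarity are hypothetical stand-ins. A real platform would use an embedding model, a vector store, and an actual LLM call, none of which are shown here.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyRagService:
    """Hypothetical reusable service: retrieve context, then build a grounded prompt."""

    def __init__(self, documents, blocked_terms=()):
        self.documents = list(documents)
        # Word counts stand in for the embeddings a real vector store would hold.
        self.vectors = [Counter(tokenize(d)) for d in self.documents]
        self.blocked_terms = {t.lower() for t in blocked_terms}

    def retrieve(self, question, k=2):
        """Return the k documents most similar to the question."""
        q = Counter(tokenize(question))
        scored = sorted(
            zip(self.documents, self.vectors),
            key=lambda pair: cosine(q, pair[1]),
            reverse=True,
        )
        return [doc for doc, _ in scored[:k]]

    def build_prompt(self, question, k=2):
        """Apply the guardrail, then assemble a prompt for a downstream model."""
        # Guardrail placeholder: reject questions containing blocked terms.
        if self.blocked_terms & set(tokenize(question)):
            raise ValueError("question rejected by guardrail")
        context = "\n".join(f"- {doc}" for doc in self.retrieve(question, k))
        return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

A use case team would then call `build_prompt` and send the result to whichever model the platform exposes, without reimplementing retrieval or guardrails for each application.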

Many data, ML, and AI vendors are bolting these capabilities onto their existing platforms. Unlike ML platforms, which start with supervised labels and center on the model building and deployment aspects of MLOps, Generative AI platforms begin with a pre-trained open-source model (e.g., Llama 2) or a proprietary SaaS model (e.g., GPT-4). They focus on contextualizing Large Language Models and on deploying capabilities that add intelligence to applications such as copilots or agents. Hence, we propose a radically different approach to fulfilling the promise of industrialized Gen AI, one that focuses on the LLMOps development loop (Connect to Model Hub -> Contextualize Model for Data -> Human Evaluation).

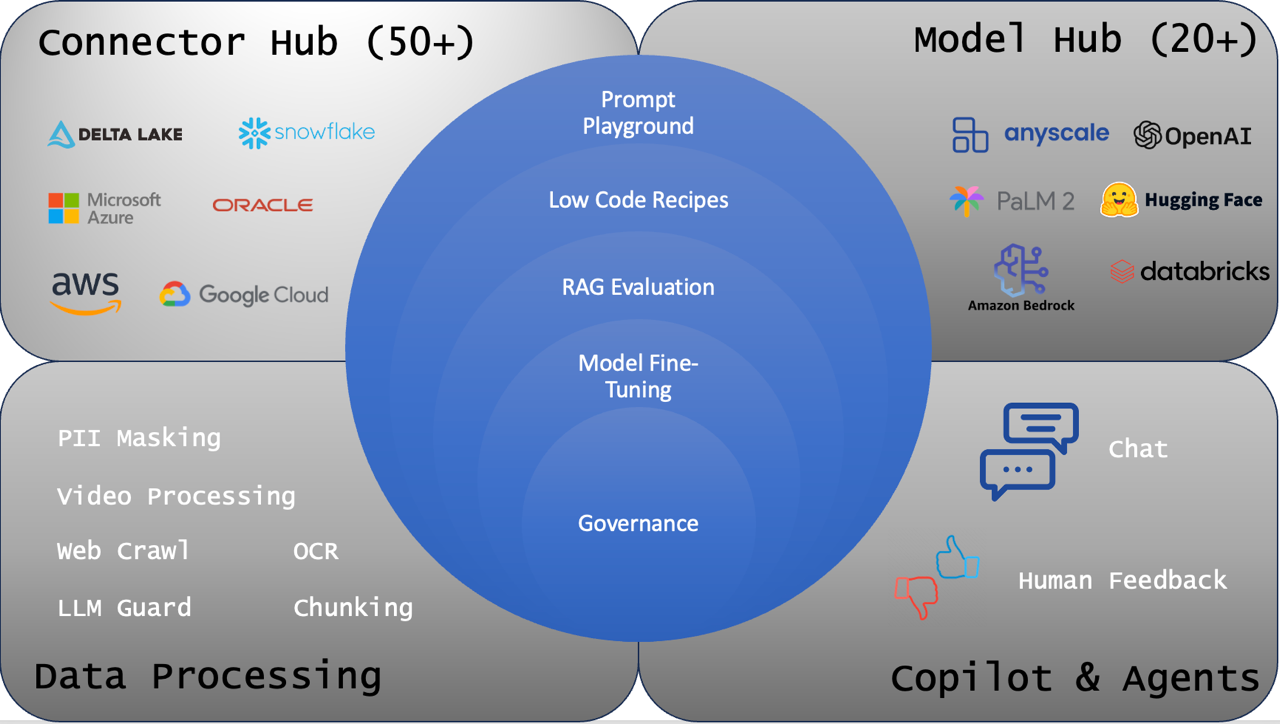
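The three steps of the LLMOps development loop (Connect to Model Hub -> Contextualize Model for Data -> Human Evaluation) can be sketched roughly as follows. This is only an illustration under stated assumptions: `connect_to_model_hub` returns a stub that echoes its prompt rather than calling a real model, and the function names, the `EvaluationLog` class, and the 1 to 5 rating scale are all hypothetical, not part of any specific product.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class EvaluationLog:
    """Collects human ratings (assumed 1 to 5) for generated answers."""
    ratings: List[int] = field(default_factory=list)

    def record(self, rating: int) -> None:
        if not 1 <= rating <= 5:
            raise ValueError("rating must be between 1 and 5")
        self.ratings.append(rating)

    def average(self) -> float:
        return sum(self.ratings) / len(self.ratings) if self.ratings else 0.0


def connect_to_model_hub(name: str) -> Callable[[str], str]:
    """Step 1 (stub): return a callable standing in for a hosted model."""
    def model(prompt: str) -> str:
        # A real model would generate text; this stub just echoes the prompt.
        return f"[{name}] {prompt}"
    return model


def contextualize(model: Callable[[str], str], context: str) -> Callable[[str], str]:
    """Step 2: wrap the model so every prompt carries enterprise context."""
    def contextual_model(question: str) -> str:
        return model(f"Context: {context} | Question: {question}")
    return contextual_model


def run_loop(model_name, context, questions, rate) -> EvaluationLog:
    """Step 3: generate an answer per question and record a human rating for it."""
    model = contextualize(connect_to_model_hub(model_name), context)
    log = EvaluationLog()
    for question in questions:
        answer = model(question)
        log.record(rate(question, answer))
    return log
```

In practice the `rate` callback would be backed by a reviewer interface, and a low average rating would feed back into step 2, for example by changing the context or the retrieval settings before the next iteration.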

Introducing Generative AI Platform for all

Karini AI presents a Generative AI platform designed to integrate proprietary data with advanced language models, effectively creating a digital copilot for every user. Karini simplifies the process with intuitive Gen AI templates that allow rapid application development. The platform offers an array of data processing tools and follows LLMOps practices for deploying models, data, and copilots. It also provides customization options and incorporates continuous feedback mechanisms to improve the quality of RAG implementations.

Conclusion

Karini AI accelerates experimentation, expedites market delivery, and bridges the generative AI adoption gap, enabling businesses to harness the full potential of this groundbreaking technology.